GridStreams High Performance Computing Server

High Performance Computing (HPC) servers are a key component in solving many industry challenges of managing, delivering, and analyzing data efficiently, particularly in the race to advance Artificial Intelligence.

GridStreams is designed to solve critical thermal issues commonly found in other GPU servers. Built around AMD’s EPYC CPU, which provides 128 PCIe 3.0 lanes per CPU, and supporting both consumer and enterprise GPUs, GridStreams maximizes performance, minimizes data risk, and delivers a powerful, cost-effective solution.

Powered by AMD EPYC

- Up to 32 high-performance "Zen" cores

- Eight DDR4 channels per CPU

- Up to 2TB RAM per CPU

- 128 Gen-3 PCIe lanes

- Dedicated security subsystem

- Integrated chipset

- Socket-compatible with next-gen EPYC processors
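The lane count above can be turned into a rough link budget. The sketch below is a minimal illustration that assumes each GPU gets a full x16 PCIe 3.0 link; that allocation is a hypothetical example, not a published GridStreams topology. The bandwidth figure is the theoretical per-direction rate after 128b/130b encoding, before protocol overhead.

```python
# Hypothetical PCIe lane budget for a CPU exposing 128 PCIe 3.0 lanes.
# The x16-per-GPU assignment is an assumption for illustration only.

PCIE3_GT_PER_S = 8.0             # PCIe 3.0 signaling rate per lane (GT/s)
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding used by PCIe 3.0


def lane_bandwidth_gb_s(lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s for a PCIe 3.0 link."""
    bits_per_s = PCIE3_GT_PER_S * 1e9 * ENCODING_EFFICIENCY * lanes
    return bits_per_s / 8 / 1e9  # bits -> bytes -> GB


def remaining_lanes(total_lanes: int, gpus: int, lanes_per_gpu: int) -> int:
    """Lanes left over after giving every GPU a link of the requested width."""
    used = gpus * lanes_per_gpu
    if used > total_lanes:
        raise ValueError(f"need {used} lanes but only {total_lanes} available")
    return total_lanes - used


if __name__ == "__main__":
    print(f"x16 link: {lane_bandwidth_gb_s(16):.2f} GB/s per direction")
    print(f"lanes left after 6 GPUs at x16: {remaining_lanes(128, 6, 16)}")
```

Under these assumptions each x16 link offers roughly 15.75 GB/s per direction, and six such links still leave 32 lanes for networking and storage.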

High Performance and Density

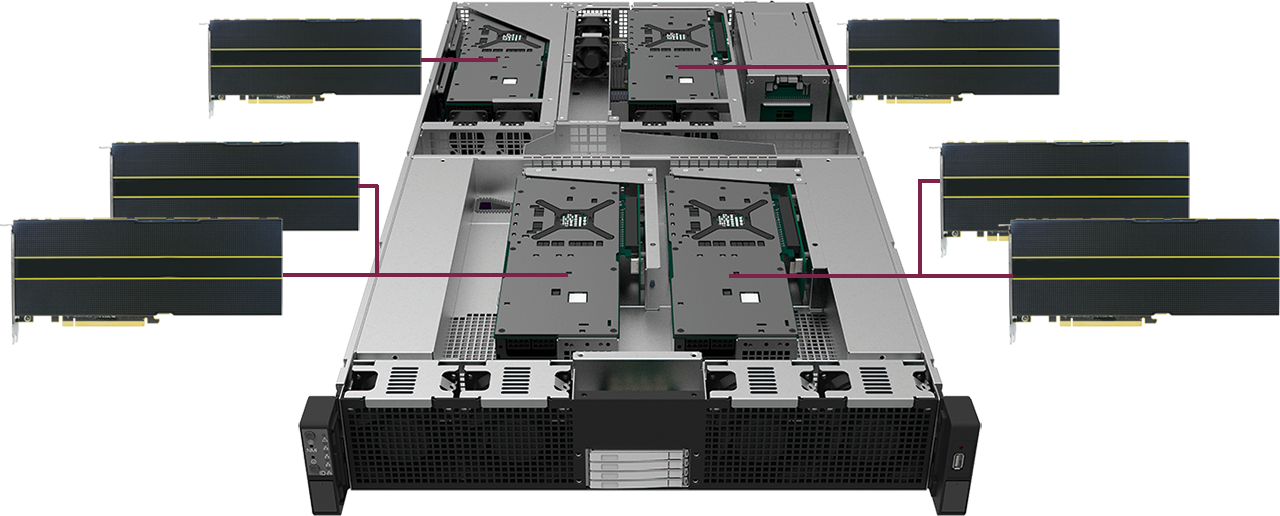

A GPU server is a single computer system that connects multiple Graphics Processing Units (GPUs) to achieve high-throughput calculation for large computational workloads. The GridStreams GPU server features an innovative design that fits up to 6 consumer and/or enterprise cards in 2U of rack space for optimal density without sacrificing power redundancy.

Traditionally, GPUs are dedicated processors used to manipulate computer images in real time through a graphics pipeline. Because of its parallel architecture of thousands of highly specialized cores, a GPU is well suited to rendering high-resolution images made up of millions of pixels. This ability to process many data streams simultaneously, originally built for tasks such as image processing, is now programmable for general algorithms, which has opened up many scientific and research applications.

General-Purpose computing on GPUs (GPGPU) is widely valued for its ability to process massive datasets and mathematical calculations, accelerating work that would otherwise run on the Central Processing Unit (CPU). In this co-processing partnership, the CPU runs the main application while offloading compute-intensive, time-consuming workloads to the GPU, which excels at them largely because it has thousands of smaller, specialized cores rather than the fewer, larger general-purpose cores of a CPU.
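To make the offloading model concrete, here is a small Python emulation of a data-parallel GPU kernel computing SAXPY (out[i] = a * x[i] + y[i]). The function names are hypothetical and nothing here calls a real GPU API: a plain loop stands in for the kernel launch, whereas on a GPU each index would be handled by its own thread. The point is that every output element depends only on its own inputs, which is exactly the kind of work that maps onto thousands of small cores.

```python
# Sequential emulation of a GPU-style data-parallel kernel (SAXPY).
# saxpy_kernel and launch are hypothetical names, not a GPU library API.

from typing import Callable, List


def saxpy_kernel(i: int, a: float, x: List[float], y: List[float], out: List[float]) -> None:
    """Work for one 'thread': compute a single output element."""
    out[i] = a * x[i] + y[i]


def launch(kernel: Callable[..., None], n: int, *args) -> None:
    """Run the kernel once per index. Each call writes only out[i], so the
    calls are independent and could run concurrently on a GPU."""
    for i in range(n):
        kernel(i, *args)


def saxpy(a: float, x: List[float], y: List[float]) -> List[float]:
    """CPU-side 'host' code: allocate the output, launch the kernel, return it."""
    if len(x) != len(y):
        raise ValueError("x and y must have the same length")
    out = [0.0] * len(x)
    launch(saxpy_kernel, len(x), a, x, y, out)
    return out


if __name__ == "__main__":
    print(saxpy(2.0, [1.0, 2.0, 3.0], [10.0, 20.0, 30.0]))  # [12.0, 24.0, 36.0]
```

The split between `saxpy` (host) and `saxpy_kernel` (device work) mirrors the CPU/GPU division of labor described above.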

*GridStreams with 6 GPU graphics cards*

GPUs perform these mathematical operations using floating-point arithmetic, the numerical standard of nearly all modern computer systems, defined by IEEE 754. Their speed is measured in Floating-Point Operations per Second (FLOPS). Modern individual GPUs deliver performance on the TeraFLOPS scale, where one TeraFLOPS is one trillion floating-point operations per second. Combined in a single GridStreams GPU server, the GPUs can deliver over 60 TeraFLOPS of single-precision performance, or more than 60 trillion operations per second.
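The aggregate figure comes from multiplying per-GPU peaks. The sketch below uses the conventional estimate of peak single-precision throughput (cores × clock × 2, counting one fused multiply-add as two operations). The core count and clock are hypothetical placeholder values, not GridStreams specifications, and real workloads typically sustain well below theoretical peak.

```python
# Back-of-the-envelope peak single-precision throughput.
# Core count and clock below are hypothetical, not GridStreams specs.
# Assumes one fused multiply-add (FMA) per core per clock, counted as 2 FLOPs.

TERA = 1e12


def peak_fp32_flops(cores: int, clock_ghz: float, flops_per_cycle: int = 2) -> float:
    """Theoretical peak FLOPS = cores x clock (Hz) x FLOPs per core per cycle."""
    return cores * clock_ghz * 1e9 * flops_per_cycle


def server_peak_flops(per_gpu_flops: float, num_gpus: int) -> float:
    """Aggregate peak for a multi-GPU server (ignores real-world scaling losses)."""
    return per_gpu_flops * num_gpus


if __name__ == "__main__":
    gpu = peak_fp32_flops(cores=3584, clock_ghz=1.58)  # hypothetical card
    total = server_peak_flops(gpu, num_gpus=6)
    print(f"per GPU: {gpu / TERA:.2f} TFLOPS")    # per GPU: 11.33 TFLOPS
    print(f"6 GPUs:  {total / TERA:.2f} TFLOPS")  # 6 GPUs:  67.95 TFLOPS
```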

High Performance Computing and Its Impact on Future Technology

High Performance Computing (HPC) is the aggregation of computing power into a single system to deliver much higher combined performance than a typical workstation. This is achieved by combining multiple computing servers into a massively parallel system in which all processors work together as a cluster to solve large computational problems. Historically, CPUs were the main driving force behind HPC performance. However, since the introduction of GPGPU and its ever-improving performance per dollar, heterogeneous CPU-plus-GPU designs have become essential for reaching workloads beyond the PetaFLOPS scale.

*GridStreams rear view with dual hot-swappable redundant power supplies*

HPC servers leveraging multiple GPUs have in turn delivered a profound increase in processing performance as the industry forges ahead into Exascale computing (i.e., a quintillion, or 10^18, floating-point operations per second). For future technology, this means the ability to model and simulate more realistically the processes of quantum mechanics, climatology, medicine, and many other fundamental scientific fields, advancing new discoveries and our understanding of the universe.
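Scaling a single server up to the PetaFLOPS and Exascale levels discussed above is again simple arithmetic. This sketch takes the 60 TeraFLOPS single-precision figure quoted for one GridStreams server and assumes perfect linear scaling across nodes, which real clusters never achieve because of interconnect and software overheads. Supercomputer rankings such as the TOP500 are also based on double-precision performance, so these counts are illustrative lower bounds only.

```python
import math

# SI prefixes for the FLOPS scales used in this article.
TERA, PETA, EXA = 1e12, 1e15, 1e18


def nodes_needed(target_flops: float, per_node_flops: float) -> int:
    """Minimum node count to reach a target peak, assuming perfect linear scaling."""
    return math.ceil(target_flops / per_node_flops)


if __name__ == "__main__":
    node = 60 * TERA  # single-precision peak quoted for one GridStreams server
    for name, target in (("1 PetaFLOPS", PETA), ("1 ExaFLOPS", EXA)):
        print(f"{name}: {nodes_needed(target, node)} nodes")
```

With these assumptions the script reports 17 nodes for one PetaFLOPS and 16,667 nodes for one ExaFLOPS.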

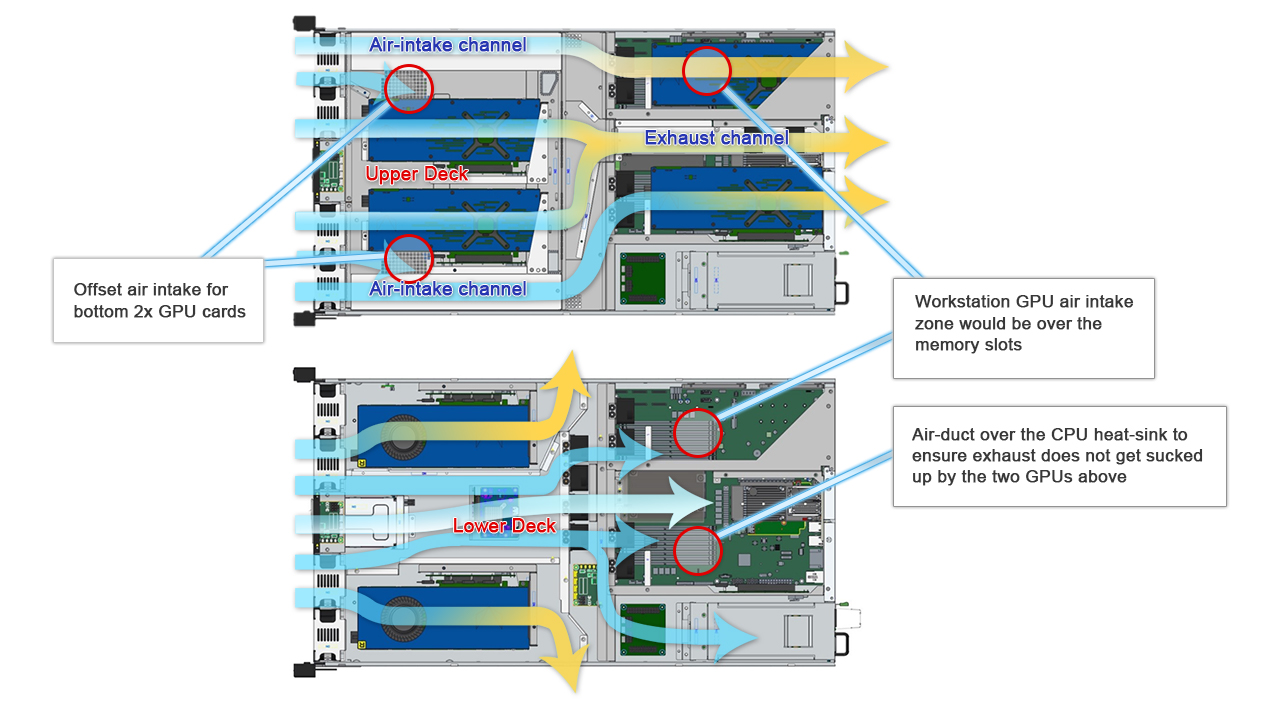

Versatile and Cost-Effective Cooling

GPUs are driving breakthroughs in HPC development and pushing new boundaries in deep learning, artificial intelligence, and computational science. However, packing multiple GPUs into a single server makes heat density difficult to manage. Nearly all of the electrical power consumed by each GPU is converted into heat, which requires intensive cooling to keep components within their operating temperature limits; otherwise they risk malfunction and possibly permanent failure.
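Because nearly every watt a GPU draws becomes heat, the cooling load follows directly from board power. The sketch below assumes a hypothetical 250 W per card (actual board power varies widely between consumer and enterprise models) and converts the total to BTU/hr using 1 W ≈ 3.412 BTU/hr.

```python
# Hypothetical example: heat the chassis must reject from six GPUs.
# 250 W per card is an assumed board power, not a GridStreams specification.

BTU_PER_HR_PER_WATT = 3.412  # 1 W is approximately 3.412 BTU/hr


def heat_load_watts(card_power_w: float, num_cards: int) -> float:
    """Steady-state heat (W): essentially all electrical input ends up as heat."""
    return card_power_w * num_cards


def watts_to_btu_per_hr(watts: float) -> float:
    """Convert a heat load in watts to BTU per hour."""
    return watts * BTU_PER_HR_PER_WATT


if __name__ == "__main__":
    load = heat_load_watts(card_power_w=250, num_cards=6)
    print(f"GPU heat load: {load:.0f} W ({watts_to_btu_per_hr(load):.0f} BTU/hr)")
```

Under these assumptions the six GPUs alone produce about 1500 W, or roughly 5118 BTU/hr, before counting CPUs, memory, and power-supply losses.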

Proper thermal design ensures that each component is sufficiently cooled, prolonging the lifetime of the system as a whole. That is why GridStreams implements a unique cooling design that gives each GPU its own intake and exhaust channel, unlike other deep learning servers that pack multiple GPUs together and create inefficient hot zones that degrade both components and performance. GridStreams ensures that each channel receives fresh air to cool its components and reduce performance throttling, for optimal operating efficiency.
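The value of a dedicated channel per GPU can be estimated with the standard sea-level approximation for heating air, BTU/hr ≈ 1.08 × CFM × ΔT(°F). The sketch below is a rough sizing aid under hypothetical inputs (250 W per card, a 15 °C allowed air temperature rise); it ignores altitude, fan curves, and duct resistance, and is not how GridStreams airflow was actually specified.

```python
# Rough airflow needed per GPU channel, from the sea-level sensible-heat
# approximation for air: BTU/hr = 1.08 x CFM x dT(degF).
# Card power and allowed temperature rise are hypothetical inputs.

BTU_PER_HR_PER_WATT = 3.412
AIR_SENSIBLE_HEAT_FACTOR = 1.08  # BTU/hr per (CFM x degF), air at sea level


def required_cfm(heat_w: float, delta_t_c: float) -> float:
    """Airflow (CFM) that removes heat_w watts with a delta_t_c (degC) air temperature rise."""
    if delta_t_c <= 0:
        raise ValueError("temperature rise must be positive")
    delta_t_f = delta_t_c * 9 / 5  # a temperature *difference* converts without the +32 offset
    return heat_w * BTU_PER_HR_PER_WATT / (AIR_SENSIBLE_HEAT_FACTOR * delta_t_f)


if __name__ == "__main__":
    # One channel per GPU: each channel only carries its own card's heat.
    per_channel = required_cfm(heat_w=250, delta_t_c=15)
    print(f"per GPU channel: {per_channel:.1f} CFM")
```

With these inputs each channel needs roughly 29.3 CFM. Because every channel draws fresh room air, each card gets the full temperature-rise budget; in a shared airflow path, downstream cards breathe air already warmed by upstream ones and have less headroom for the same airflow.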